I made my multi-arch Docker image 10x faster

This blog post discusses a performance issue affecting multi-architecture Docker images on Apple Silicon, and how to fix it.

May 24, 2024

Benny Cornelissen

Cloud Consultant

But before I spill the beans on how I managed to do that, let’s start at the beginning…

Once upon a time…

… there was a consulting company called Skyworkz. Like many other consulting companies, our clients often require us to send them resumes of our people before starting a new project. In many cases, a resume is what ‘gets you in the door’, so we take our resumes seriously.

A few years ago we collectively got fed up with maintaining our stack of resumes in document editors like Microsoft Word. We hated dealing with layout issues, and we disliked that our resumes lacked a uniform structure. We also wanted our resumes to be machine-readable, so we could easily gain useful insights into our team’s collective skills and experience.

Hacking the Resume

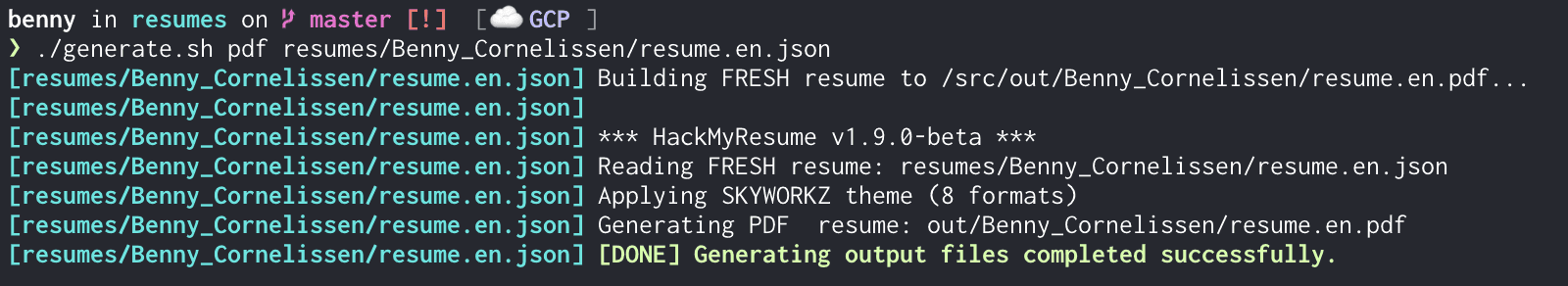

To address this, we switched to JSON-formatted resumes and an open-source generator that can convert them to PDF, HTML, Markdown, Word, or all of the above. Now all we had to do was write some JSON, run a shell script that started a Docker container, and push our JSON changes to GitHub. In theory…

In reality, local development is hard, and I found our setup broken (for me, at least) about as often as I needed it. So when the time came to update my resume recently, I decided to thoroughly refresh the setup. I rewrote the scripting completely and added a Gitpod setup, and it all seemed to work quite nicely: running ./generate.sh pdf would automatically find all resumes (regardless of format), validate them, and generate PDF versions. For 30 resumes, that took roughly 15 seconds using parallel sub-shells (which is a topic for another blog post). Cool!
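For readers curious what such a script does, here is a rough Python sketch of the discover-and-generate loop. The post uses shell with parallel sub-shells; a thread pool that launches one container per resume is swapped in here as a rough equivalent. The image name, the `*.json`/`*.yaml` file patterns, and the arguments passed to the container are all hypothetical, and the validation step is left out.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Hypothetical values: the post doesn't show the real image name or file layout.
IMAGE = "example/resume-generator:latest"
PATTERNS = ("*.json", "*.yaml", "*.yml")


def find_resumes(root: Path) -> list[Path]:
    """Collect resume files under root, regardless of source format."""
    found = set()
    for pattern in PATTERNS:
        found.update(root.rglob(pattern))
    return sorted(found)


def build_command(resume: Path, fmt: str) -> list[str]:
    """One containerized generator run for one resume (container arguments are illustrative)."""
    return [
        "docker", "run", "--rm",
        "-v", f"{resume.parent.resolve()}:/work",  # mount the resume's folder into the container
        IMAGE, fmt, f"/work/{resume.name}",
    ]


def generate_all(root: Path, fmt: str = "pdf", run=subprocess.run, workers: int = 8) -> list:
    """Run one container per resume, several at a time, much like parallel sub-shells."""
    resumes = find_resumes(root)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda r: run(build_command(r, fmt), check=True), resumes))
```

Passing a different `run` callable makes it easy to dry-run the loop, for example `generate_all(Path("resumes"), run=lambda cmd, check: print(" ".join(cmd)))`, where `resumes` is a hypothetical directory name.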

Make it fast

Although I had thoroughly reworked all the scripting around the Docker container that actually does the generating, I hadn’t touched the Docker image itself yet. It wasn’t until I rebuilt the image locally that I realized I had been running an AMD64 image on my M1 MacBook (through Rosetta emulation) all this time. My locally rebuilt ARM64 image was twice as fast as the one I had been using. Awesome!
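To spot this kind of mismatch earlier, you could compare an image’s architecture with the host’s. Below is a minimal Python sketch, assuming a native (non-Rosetta) Python interpreter so that `platform.machine()` reports the real CPU; `docker image inspect --format '{{.Architecture}}'` reads the architecture recorded in a local image. The helper names are illustrative.

```python
import platform
import subprocess
from typing import Optional

# platform.machine() spellings mapped to Docker's architecture names.
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def docker_arch(machine: str) -> str:
    """Normalize a machine/architecture string to Docker's naming (amd64, arm64, ...)."""
    key = machine.lower()
    return _ARCH_ALIASES.get(key, key)


def image_arch(image: str) -> str:
    """Architecture recorded in a locally available image, as reported by docker image inspect."""
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Architecture}}", image],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def runs_emulated(image_architecture: str, machine: Optional[str] = None) -> bool:
    """True when the image targets a different CPU than the host, e.g. amd64 on an M1 Mac."""
    host = docker_arch(machine or platform.machine())
    return docker_arch(image_architecture) != host
```

On an M1 MacBook with a native Python, `runs_emulated(image_arch("example/resume-generator:latest"))` would return `True` for an AMD64 image (the image name is a placeholder).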

But this led to a new issue: Gitpod environments run on AMD64, and so do the machines of about 30% of the people at Skyworkz. So I either had to maintain separate images for linux/amd64 and linux/arm64, or build a single multi-architecture image that contained both.

Building a multi-arch Docker image

Since I hadn’t built multi-arch Docker images before, I took to the internet. A quick Google search led me to a very straightforward way of using docker buildx to build for both platforms simultaneously, package the results into a single Docker manifest, and push it to Docker Hub. One-stop shop: I love it.
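The buildx approach boils down to a single command. It is sketched here as a Python helper that only assembles the argument list; the tag is a placeholder, and the exact command from that search result isn’t reproduced in this post. The flags used (`--platform` with a comma-separated list, `--tag`, `--push`) are standard `docker buildx build` options.

```python
PLATFORMS = ("linux/amd64", "linux/arm64")


def buildx_command(tag: str, context: str = ".", platforms=PLATFORMS) -> list[str]:
    """docker buildx invocation that builds every platform and pushes one multi-arch tag."""
    return [
        "docker", "buildx", "build",
        "--platform", ",".join(platforms),  # e.g. "linux/amd64,linux/arm64"
        "--tag", tag,
        "--push",  # push the combined multi-arch result straight to the registry
        context,
    ]
```

Running it would be `subprocess.run(buildx_command("example/resume-generator:latest"), check=True)`, on a builder that supports multi-platform output (for example Docker Desktop with the containerd image store enabled).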

I did have to switch Docker Desktop to use containerd for its image store to make this work, but otherwise the build worked without issues. Or so I thought…

And then we hit a snag…

When I first ran our ./generate.sh script with the new multi-arch image, I was shocked. It took nearly 40 seconds to generate PDFs for 30 resumes. That’s more than double the time the AMD64 version took through Rosetta, and almost 10x slower than the pure ARM64 image I built earlier! That can’t be right…

After making sure I wasn’t behind on Docker Desktop updates, I tested again. Same result. I tested on a different Mac. Same. I tested with a single resume to rule out resource constraints. Same. Then I tested in Gitpod. No issues at all! There, the multi-arch image runs about as fast as the pure AMD64 image. So it seems our issue is limited to Apple Silicon?

Give it some old-fashioned…

At this point I started to have some doubts about buildx. Even though it has been around for roughly 5 years (according to the project’s release history), the most recent version of buildx at the time of writing is 0.14.1. Not exactly a version number you’d associate with ‘mature’ just yet.

But multi-architecture manifests have been around longer than that. So I took to the internet once more to learn the ‘old-fashioned’ way of building such an image. Sure enough, after a few minutes I had a solution.

Building a multi-arch Docker image: the old-fashioned way

The ‘pre-buildx’ way of creating a multi-arch image is to create a Docker manifest that combines two images built for specific architectures. The end result is not too different from what buildx is supposed to achieve, but in this case you have to do all the legwork yourself.
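In practice the legwork is a short sequence of commands: build and push one image per architecture under its own tag, then combine them with `docker manifest create` and publish the result with `docker manifest push`. The Python sketch below only assembles those commands in order; the repository name and the `-amd64`/`-arm64` tag suffixes are hypothetical naming choices. The per-architecture images are pushed first because `docker manifest create` looks them up in the registry.

```python
import subprocess

ARCHES = ("amd64", "arm64")


def manifest_commands(repo: str, tag: str, arches=ARCHES) -> list[list[str]]:
    """Commands for the pre-buildx flow: one image per arch, then one manifest list."""
    per_arch = [f"{repo}:{tag}-{arch}" for arch in arches]
    commands = []
    for arch, image in zip(arches, per_arch):
        # Build and push a single-architecture image under an arch-specific tag.
        commands.append(["docker", "build", "--platform", f"linux/{arch}", "-t", image, "."])
        commands.append(["docker", "push", image])
    # Combine the pushed images under the shared tag, then publish that manifest list.
    commands.append(["docker", "manifest", "create", f"{repo}:{tag}", *per_arch])
    commands.append(["docker", "manifest", "push", f"{repo}:{tag}"])
    return commands


def run_all(commands, run=subprocess.run) -> None:
    """Execute the commands in order; check=True stops at the first failure."""
    for cmd in commands:
        run(cmd, check=True)
```

`run_all(manifest_commands("example/resume-generator", "latest"))` would then execute the whole sequence.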

But now that I have 2 multi-arch images, can I see any differences? I decided to inspect both image manifests using docker manifest inspect <image>:<tag>. First, the one I built with buildx, then the ‘old-fashioned’ one.

OK, they’re different all right. Different media types, but also: why does the buildx variant have 4 entries in the manifest, and why is the architecture for 2 of these entries ‘unknown’? I tried building the buildx variant again, but ended up with the same result.
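The entries can be pulled apart programmatically. As a hedged aside: since v0.10, buildx attaches provenance attestations by default, and these typically show up in the index as extra entries with an `unknown/unknown` platform. The outer media type is then usually an OCI image index (`application/vnd.oci.image.index.v1+json`) rather than the Docker manifest list (`application/vnd.docker.distribution.manifest.list.v2+json`) that `docker manifest create` produces. The sketch below only classifies entries; the exact fields may differ between Docker versions, and the function name is illustrative.

```python
import json

ATTESTATION = "attestation-manifest"


def summarize_index(raw: str) -> dict:
    """Split the entries of a `docker manifest inspect` index into platforms and attestations."""
    index = json.loads(raw)
    platforms, attestations = [], []
    for entry in index.get("manifests", []):
        p = entry.get("platform", {})
        name = f"{p.get('os', '?')}/{p.get('architecture', '?')}"
        kind = entry.get("annotations", {}).get("vnd.docker.reference.type")
        # Attestation entries carry no runnable image, hence the unknown/unknown platform.
        if kind == ATTESTATION or name == "unknown/unknown":
            attestations.append(entry["digest"])
        else:
            platforms.append(name)
    return {"mediaType": index.get("mediaType"), "platforms": platforms, "attestations": attestations}
```

Feeding it the saved output of `docker manifest inspect <image>:<tag>` lists the real platforms separately from the extra entries.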

Getting some old-fashioned performance gains…

In the end, I’m not invested enough in buildx to spend hours figuring out why its image was slow. I wanted a multi-architecture image that wasn’t slow, but I hadn’t tested the ‘old-fashioned’ one yet. So I decided to run some tests with all 4 variants that I now had:

- arm64: ARM64 image to run natively on Apple Silicon

- amd64: AMD64 image to run natively on Intel/AMD hardware

- multiarch-buildx: Multi-arch using buildx

- multiarch: Multi-arch using docker manifest

For the test setup, I would run the ./generate.sh script in a for loop with each of the Docker images. I would clean up the output directory (./out) between runs, so it would always write a new PDF file instead of overwriting an existing one. I would also run the test both for a single resume and for all 30 resumes in parallel.
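The test loop can be sketched in Python as follows, swapped in for the shell `for` loop. How ./generate.sh picks its image isn’t shown in the post, so the `RESUME_IMAGE` environment variable and the `example/resume-generator` repository are hypothetical. Taking the median of a few runs is an added choice to smooth out noise.

```python
import os
import shutil
import statistics
import subprocess
import time
from pathlib import Path

# Hypothetical tags for the four variants listed above; substitute your own repository.
IMAGES = [f"example/resume-generator:{t}" for t in ("arm64", "amd64", "multiarch-buildx", "multiarch")]


def run_generate(image: str) -> None:
    """Run ./generate.sh pdf against one image (assumes the script reads RESUME_IMAGE)."""
    env = {**os.environ, "RESUME_IMAGE": image}  # hypothetical variable name
    subprocess.run(["./generate.sh", "pdf"], check=True, env=env)


def benchmark(images, out_dir=Path("out"), runs=3, run=run_generate) -> dict:
    """Median wall-clock seconds per image, with the output directory wiped before every run."""
    results = {}
    for image in images:
        timings = []
        for _ in range(runs):
            shutil.rmtree(out_dir, ignore_errors=True)  # always write fresh PDFs
            start = time.perf_counter()
            run(image)
            timings.append(time.perf_counter() - start)
        results[image] = statistics.median(timings)
    return results


def slowdown(results: dict, baseline: str) -> dict:
    """How many times slower each image is than the baseline image."""
    base = results[baseline]
    return {image: t / base for image, t in results.items()}
```

`slowdown(benchmark(IMAGES), IMAGES[0])` would then report each variant relative to the native ARM64 image.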

Victory at last!

Extensive testing and retesting across multiple machines consistently showed results similar to what you can see above: our ‘old-fashioned’ multi-arch image showed identical performance to the native ARM64 image, which is what we would expect. Meanwhile the image we created with buildx is 9-10x slower.

I also tested in Gitpod to check whether our old-fashioned approach had negative side-effects for the Intel/AMD side of things, but luckily all was good there as well.

Wrapping up

If you made it to this point: thanks for bearing with me. I hope you found this useful. If you just scrolled down to the end to get the beans, here’s the short version (you can scroll back up for details):

TL;DR → Multi-arch images created for both linux/amd64 and linux/arm64 with docker buildx seem to perform really poorly on Apple Silicon: up to 10x slower than a single-arch ARM64 image. Building the multi-arch image by combining architecture-specific images into a single manifest with docker manifest create resulted in performance identical to the single-arch ARM64 image, without any negative side-effects.